During my configuration adventures I've ended up with multiple distributed switches across my cluster. I'm going to try to migrate one host's connections from one dswitch to another.

I suppose there are a number of ways I could do this, but I'll start by going to the switch I want to move the connections to and kicking off the wizard.

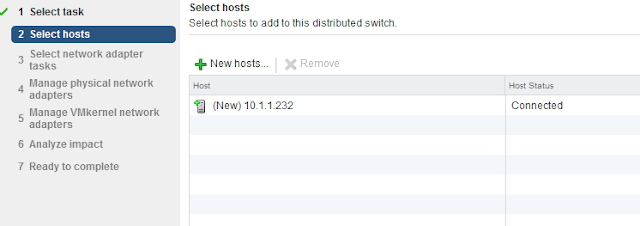

- Actions->Add and Manage Hosts

- Click the big green plus sign and choose the host I want to add. Here it is once I've picked it from the list.

- Check the first two boxes.

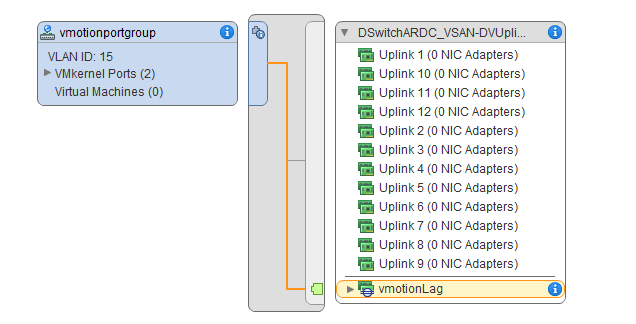

- I'll need to reassign the uplinks from Dswitch_Demo to this switch, DswitchARDC_VSAN.

- Selecting vmnic0 and clicking Assign uplink, I'm going to put these on my previously set up LAG ports 4 and 5.

- That looks right, let's see what happens next.

- Here vCenter is complaining that I'm going to leave vmk2 high and dry, but that's OK; it will be deleted after this. Actually, I need to move vmk2 as well, but I messed up. See below :)

- This next screen checks impact. None here, so OK.

- And one more pointless status page from vCenter before we complete.

- Wait a tick, that's not right! Where is my vmk2? I guess I left it back on the other switch. Let me go back into the wizard and see if I can move that over too.

- This time I picked "Manage Host Networking" instead of Add Host.

- I just need to move the vmk, so only check "Manage VMkernel adapters" on the next page.

- Here is the page I must've skipped last time. Guess I need to pay attention to DETAIL!

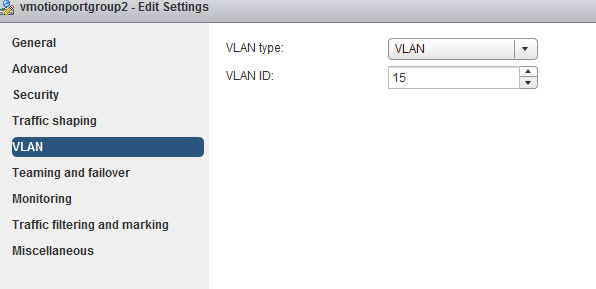

- OK, so I selected vmk2, clicked Assign port group, and chose the port group for my new dswitch. Here is the end result.

- Here goes nothing, hope it works.

- But first...analyze impact...show summary. (so verbose)

- When you're done, the wizard just disappears, so I guess you have to dig back into the client to verify the changes.

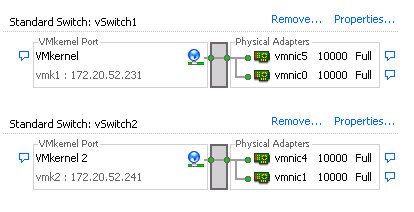

- This looks almost right. For some reason the link light isn't on for my vmk2 on 172.20.52.242 (the vmk I just moved).

- Well, I guess this is just another shortcoming of the web client. I refreshed the browser and it is "lit" now. Let's run a vMotion and see what happens.

- Wow, that was fast! The vMotion test succeeded. Looks like I'm good to go.
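
Since the wizard closes without showing the result, a scripted check is one way to confirm where each vmk ended up, and it would have caught my stranded vmk2 on the first pass. Here is a minimal Python sketch of that idea. It assumes `host` looks like a pyVmomi `vim.HostSystem`, and the attribute path (`config.network.vnic`, `spec.distributedVirtualPort.switchUuid`) follows the vSphere API object model as I understand it, so check it against the API reference before relying on it. The `fake_host` and `fake_vnic` helpers and the UUID strings are made up purely so the example runs without a vCenter connection.

```python
from types import SimpleNamespace


def vmk_locations(host):
    """Map each VMkernel adapter name to the UUID of its distributed switch.

    Adapters that are not connected to a distributed switch map to None.
    """
    locations = {}
    for vnic in host.config.network.vnic:
        # Assumption: this attribute is unset/None for standard-switch vmks.
        dvport = getattr(vnic.spec, "distributedVirtualPort", None)
        locations[vnic.device] = dvport.switchUuid if dvport else None
    return locations


def left_behind(host, target_uuid):
    """Return the names of VMkernel adapters that are not on the target switch."""
    return sorted(
        name for name, uuid in vmk_locations(host).items() if uuid != target_uuid
    )


# --- Hypothetical stand-ins for a real host object, for illustration only ---
def fake_vnic(device, switch_uuid):
    dvport = SimpleNamespace(switchUuid=switch_uuid) if switch_uuid else None
    return SimpleNamespace(
        device=device, spec=SimpleNamespace(distributedVirtualPort=dvport)
    )


def fake_host(vnics):
    network = SimpleNamespace(vnic=vnics)
    return SimpleNamespace(config=SimpleNamespace(network=network))


if __name__ == "__main__":
    # State after my first wizard run: uplinks moved, vmk2 still on the old switch.
    host = fake_host([
        fake_vnic("vmk0", "uuid-vsan"),  # placeholder UUID for DswitchARDC_VSAN
        fake_vnic("vmk2", "uuid-demo"),  # placeholder UUID for Dswitch_Demo
    ])
    print(left_behind(host, "uuid-vsan"))
```

Against a real environment, the target UUID would come from the distributed switch object itself rather than a hard-coded string, and an empty list from `left_behind` would mean nothing was stranded on the old switch.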